GitHub Actions Components

Understanding the five core building blocks that power CI/CD workflows.

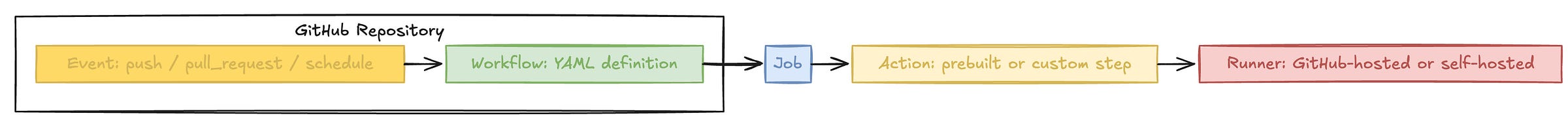

GitHub Actions orchestrates complex CI/CD workflows through five interconnected components: workflows, events, jobs, actions, and runners. Each component serves a specific purpose, and together they create a powerful automation platform for data teams.

Let's explore what each component does and how they work together in practice.

Workflows: Your Automation Blueprint

Think of a workflow as the blueprint for your automation. Workflows are YAML files that live in your repository's .github/workflows/ directory and define exactly what happens when your automation kicks off. Every workflow tells a complete story, from the initial trigger through the final deployment.

When you're dealing with complex CI/CD automations, workflows become your single source of truth. Instead of scattered scripts and manual processes, everything lives in version-controlled files alongside your code. GitHub Actions simply follows the blueprint you've defined, coordinating every moving piece.
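To make the blueprint idea concrete, here is a minimal sketch of a workflow file. The file name, workflow name, and job id are placeholders chosen for illustration, not anything the platform requires.

```yaml
# .github/workflows/ci.yml  (hypothetical file name)
name: CI                        # label shown in the repository's Actions tab

on: push                        # event: run on every push

jobs:
  hello:                        # job id, chosen for this example
    runs-on: ubuntu-latest      # runner: a GitHub-hosted Ubuntu machine
    steps:
      - uses: actions/checkout@v4                          # action: fetch the repository contents
      - run: echo "Hello from ${{ github.repository }}"    # plain shell command
```

Committing a file like this to the repository is all it takes; there is no separate server to configure.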

Events: The Spark That Ignites Everything

Events transform your repository from passive storage into a reactive system. They're the triggers that wake up your workflows and set everything in motion. The most common trigger you'll encounter is the humble push event—every time code hits your repository, it can spark a cascade of automation.

But events go far beyond simple code changes. You can schedule workflows to run on cron patterns, perfect for those weekly model retraining cycles or daily data quality checks. Manual triggers (the workflow_dispatch event) let you deploy models on demand or run ad-hoc analysis jobs. External systems can even trigger workflows through the repository_dispatch event, for example when your data sources update or monitoring systems detect anomalies.
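The triggers described above all live under the `on:` key of a workflow. The sketch below combines them in one place; the branch name, cron times, input name, and the `data-source-updated` event type are assumptions made up for the example.

```yaml
on:
  push:
    branches: [main]              # code changes that land on main
  schedule:
    - cron: "0 6 * * *"           # every day at 06:00 UTC, e.g. data quality checks
    - cron: "0 3 * * 1"           # every Monday at 03:00 UTC, e.g. weekly retraining
  workflow_dispatch:              # manual run from the Actions tab or the API
    inputs:
      model_version:              # hypothetical input, read as ${{ inputs.model_version }}
        description: "Model version to deploy"
        required: true
        type: string
  repository_dispatch:            # triggered by an external system via the REST API
    types: [data-source-updated]  # hypothetical custom event type
```

Scheduled times are interpreted in UTC, and scheduled runs use the workflow file on the default branch, so it is worth double-checking both before relying on a cron trigger.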

This event-driven architecture aligns perfectly with modern data practices. Your infrastructure responds to changes automatically, whether that's new data arriving, code being updated, or performance metrics crossing thresholds.

Jobs: Breaking Down Complexity

Jobs are where workflows get practical. They represent the logical phases of your automation process. Each job runs in its own isolated environment (on GitHub-hosted runners, a fresh virtual machine), which means you can safely experiment without worrying about one job contaminating another.

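The real power emerges when you consider how jobs can run in parallel or in sequence. By default, jobs in a workflow run in parallel; declaring a dependency with the needs keyword makes one job wait for another. Jobs also serve as natural checkpoint boundaries: if a job fails, the jobs that depend on it are skipped by default, and an if condition can restrict a job to specific branches or events. This conditional execution prevents expensive mistakes, such as training or deploying on data that failed validation, while maintaining pipeline efficiency.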
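Parallel jobs, sequencing, and conditional execution can be sketched with the `needs` and `if` keywords. The job ids and the scripts under `scripts/` are hypothetical stand-ins for whatever your pipeline actually runs.

```yaml
jobs:
  validate-data:                        # no `needs`, so it starts immediately
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: python scripts/validate_data.py   # hypothetical script

  unit-tests:                           # runs in parallel with validate-data
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: python scripts/run_tests.py       # hypothetical script

  train-model:
    needs: [validate-data, unit-tests]  # waits for both; skipped if either fails
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: python scripts/train.py           # hypothetical script

  deploy:
    needs: train-model
    if: github.ref == 'refs/heads/main' # checkpoint: deploy only from main
    runs-on: ubuntu-latest
    steps:
      - run: echo "Deploying model"            # placeholder for a real deploy step
```

Because each job starts from a clean environment, anything `train-model` needs from the earlier jobs has to be passed along explicitly, for example as an uploaded artifact.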

Actions: The Workhorses

If jobs are the phases of your pipeline, actions are the individual tasks that make things happen. They're the atomic units of functionality—checking out code, setting up Python environments, running tests, deploying applications, or sending notifications.

The GitHub Actions marketplace has transformed how we think about automation. Instead of writing custom scripts for every common task, you can leverage thousands of pre-built actions maintained by the community. Need to set up a specific Python version with dependency caching? There's an action for that. Want to deploy to AWS or configure cloud credentials? The marketplace has you covered.
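As a rough sketch of what this looks like in a job, the steps below use three published actions: `actions/checkout`, `actions/setup-python`, and `aws-actions/configure-aws-credentials`. The Python version, region, and role ARN are placeholders, and the example assumes a requirements.txt at the repository root and an IAM role already set up for GitHub's OIDC provider.

```yaml
jobs:
  deploy:
    runs-on: ubuntu-latest
    permissions:
      id-token: write             # lets the job request an OIDC token for AWS
      contents: read              # still needed so checkout can read the repo
    steps:
      - uses: actions/checkout@v4
      - uses: actions/setup-python@v5
        with:
          python-version: "3.12"  # assumed version for this example
          cache: pip              # reuse pip downloads between runs
      - run: pip install -r requirements.txt
      - uses: aws-actions/configure-aws-credentials@v4
        with:
          role-to-assume: arn:aws:iam::123456789012:role/example-deploy-role  # placeholder ARN
          aws-region: us-east-1   # placeholder region
```

Pinning each action to a major version tag, as shown, is a common compromise between stability and receiving fixes; some teams pin to a full commit SHA instead.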

But actions aren't limited to the marketplace. You can create custom actions that encapsulate your team's specific workflows—data validation routines, model deployment patterns, or reporting processes. This reusability means your automation patterns can spread across projects and teams effortlessly.
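One way to package a team routine is a composite action stored in the same repository. The sketch below is an assumption-laden example: the directory, the input name, and the `validate.py` script are all hypothetical.

```yaml
# .github/actions/validate-data/action.yml  (hypothetical path)
name: Validate data
description: Run the team's data validation routine
inputs:
  dataset-path:
    description: Path to the dataset to validate
    required: true
runs:
  using: composite
  steps:
    - run: python "$GITHUB_ACTION_PATH/validate.py" "$DATASET_PATH"  # validate.py sits next to action.yml
      shell: bash                 # composite run steps must name a shell
      env:
        DATASET_PATH: ${{ inputs.dataset-path }}
```

A workflow in the same repository could then call it like any other action:

```yaml
steps:
  - uses: actions/checkout@v4     # required so the local action is on disk
  - uses: ./.github/actions/validate-data
    with:
      dataset-path: data/latest.csv   # hypothetical dataset
```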

Runners: The Compute Foundation

Runners provide the actual compute environment where your automation executes. They're the virtual machines, containers, or physical hardware that power your workflows. For data teams, runner selection often determines whether a pipeline finishes in a reasonable time, or whether it can run at all.

GitHub's hosted runners work brilliantly for most data validation, testing, and lightweight processing tasks. They're immediately available, fully managed, and come with common tools pre-installed. But when you need serious computational power—GPU clusters for model training, high-memory instances for big data processing, or specialized environments with custom software—self-hosted runners become essential.

GitHub also keeps its hosted runner images current. The ubuntu-latest label is migrating to Ubuntu 24.04, giving your data workflows access to newer tools and performance improvements.
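Runner choice comes down to the `runs-on` key of each job. The sketch below contrasts a floating hosted label, a pinned hosted image, and a self-hosted machine; the `gpu` label is a hypothetical custom label you would assign when registering your own runner.

```yaml
jobs:
  data-checks:
    runs-on: ubuntu-latest               # hosted; follows whichever Ubuntu image the label points to
    steps:
      - run: cat /etc/os-release         # print the image's OS version

  pinned-checks:
    runs-on: ubuntu-24.04                # hosted; pinned so a label migration cannot change it
    steps:
      - run: cat /etc/os-release

  train-on-gpu:
    runs-on: [self-hosted, linux, gpu]   # self-hosted; must match all listed labels
    steps:
      - run: nvidia-smi                  # assumes NVIDIA drivers are installed on that machine
```

Pinning trades automatic upgrades for reproducibility, which tends to matter when a pipeline depends on specific system library versions.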

How Everything Connects

A GitHub Actions workflow begins when an event occurs in a repository, such as a push to a branch, the opening of a pull request, or a scheduled trigger. This event activates a workflow — a YAML configuration stored in the .github/workflows directory — which defines the jobs to run, the conditions under which they run, and the steps they contain.

Each job is a collection of actions and shell commands that execute sequentially on a runner, which is the actual machine performing the work. Actions are reusable units — either provided by GitHub, developed by the community, or custom-built — that handle tasks like checking out code, setting up environments, or deploying applications. Jobs can run in sequence or in parallel, and runners can be GitHub-hosted for simplicity or self-hosted for more control over the environment. Together, these components form a complete automation pipeline from trigger to execution.
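The flow described above can be traced through a single file. This is an illustrative sketch rather than a recommended pipeline: the job ids and echo commands are placeholders, and the comments mark where each of the five components appears.

```yaml
name: Pipeline                    # WORKFLOW: this file, stored in .github/workflows/

on:                               # EVENTS: what starts the workflow
  pull_request:
  push:
    branches: [main]

jobs:
  lint:                           # JOB: runs in parallel with `test`
    runs-on: ubuntu-latest        # RUNNER: GitHub-hosted
    steps:
      - uses: actions/checkout@v4         # ACTION: reusable unit
      - run: echo "lint placeholder"      # shell command

  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - run: echo "test placeholder"

  deploy:
    needs: [lint, test]           # sequence: waits for both jobs to succeed
    if: github.event_name == 'push'   # condition: skip on pull requests
    runs-on: [self-hosted, linux] # RUNNER: self-hosted for more control
    steps:
      - run: echo "deploy placeholder"
```

Within each job the steps run top to bottom on the same runner, while the jobs themselves are ordered only by `needs`.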

Why This Architecture Matters

This component-based design gives you something precious in the data world: predictable automation that scales with complexity. Each component has a clear responsibility, making your workflows easier to understand, debug, and maintain. When something goes wrong—and it will—you can pinpoint exactly which component failed and why.

The modularity pays dividends as your team grows. Actions become reusable building blocks that spread across projects. Job patterns emerge that handle common data processing tasks. Workflows become templates that new team members can adapt rather than build from scratch.

Most importantly, this architecture gives you the flexibility to handle both simple and complex scenarios with the same underlying framework. Whether you're running basic data quality checks or orchestrating multi-stage ML pipelines across cloud regions, the same five components provide the foundation.

Conclusion

Understanding these five components is the foundation for building sophisticated data automation. In the upcoming articles, we'll dive deep into each component individually, exploring advanced patterns, real-world implementation strategies, and the specific techniques that separate good data pipelines from great ones.

Each component has nuances and capabilities that become apparent only when you dig deeper. Workflows have advanced orchestration patterns. Events support complex filtering and conditional logic. Jobs can be matrixed and parallelized in sophisticated ways. Actions can be composed and customized for your exact needs. Runners can be optimized for performance and cost.